Recognizing Text in Images Using Firebase ML Kit in a Kotlin App

As a mobile developer, you may at some point need to build an application that can recognize text in images, a task popularly known as Optical Character Recognition (OCR).

Thanks to the Google Firebase Machine Learning Kit (ML Kit) for Android, this is easier than ever, and it can be achieved without writing large chunks of code to handle everything from scratch.

Firebase ML Kit was first introduced at Google I/O 2018 and received new features at Google I/O 2019, alongside other announcements such as the upgraded Google Assistant, Android Q, and the new Pixel 3a and Pixel 3a XL smartphones.

ML Kit offers many features, including text recognition, face detection, barcode scanning, image labeling and classification, and a host of others.

In this tutorial, we will focus solely on text recognition (OCR) using Firebase ML Kit.

You can check out the demo and source code if you want to refer to them as we get started.

OCR With Firebase ML Kit

According to the official Google Firebase docs:

“ML Kit is a mobile SDK that brings Google’s machine learning expertise to Android and iOS apps in a powerful yet easy-to-use package. Whether you’re new or experienced in machine learning, you can implement the functionality you need in just a few lines of code. There’s no need to have deep knowledge of neural networks or model optimization to get started. On the other hand, if you are an experienced ML developer, ML Kit provides convenient APIs that help you use your custom TensorFlow Lite models in your mobile apps.”

This feature is available both in the cloud and on-device. The text recognition API makes it possible to recognize text in any Latin-based language. One useful application is automating tedious data entry for receipts, credit cards, business cards, and so on. The cloud-based API lets developers extract text from photos of documents. This text can then be used for document translation or to improve accessibility.
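As a rough illustration of the data-entry use case, text that has already been recognized can be post-processed with ordinary string handling. The sketch below is plain Kotlin and not part of ML Kit; the helper name `extractTotal` and the sample receipt are hypothetical, and it assumes the amount appears on a line that mentions "total".

```kotlin
// Hypothetical helper, not an ML Kit API: finds the amount on the last
// line that mentions "total" in a block of already-recognized text.
fun extractTotal(recognizedText: String): Double? {
    // Matches amounts such as 5.75 or 5,75
    val amount = Regex("""\d+[.,]\d{2}""")
    return recognizedText.lineSequence()
        .filter { it.contains("total", ignoreCase = true) }
        .mapNotNull { line ->
            amount.find(line)?.value?.replace(',', '.')?.toDoubleOrNull()
        }
        .lastOrNull()
}

fun main() {
    // Made-up sample standing in for text returned by the recognizer
    val receipt = "Coffee 3.50\nBagel 2.25\nTOTAL 5.75"
    println(extractTotal(receipt))
}
```

Real receipts vary widely, so a production app would likely need more robust parsing than a single regular expression.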

Getting Started

- Create a new Android Studio project and a new Firebase project.

In our previous post on adding Google Firebase to your application, we outlined the steps for creating a new Firebase project and adding Firebase to your application; refer to it if you need a refresher.

Once you have created the Firebase project and added Firebase to your application, add apply plugin: 'com.google.gms.google-services' at the bottom of your app-level build.gradle file. It will look something like this:

dependencies {

...

...

}

apply plugin: 'com.google.gms.google-services'

To start using ML Kit, let's add the Firebase ML Kit dependency. In our app-level build.gradle file, we add the following dependency:

dependencies {

...

implementation 'com.google.firebase:firebase-ml-vision:23.0.0'

}

You can always check for the latest version number, and it is best to stick to the latest release for the best experience.

- The next step is not compulsory, but Firebase recommends it. If you use the on-device API, configure your app to automatically download the ML model to the device after your app is installed from the Play Store. This ensures that you can later perform text recognition without an Internet connection.

This can be done by adding the following code to our application’s AndroidManifest.xml file:

<application ...>

...

<meta-data

android:name="com.google.firebase.ml.vision.DEPENDENCIES"

android:value="ocr" />

</application>

Now let's add the Internet permission to our manifest file.

<uses-permission android:name="android.permission.INTERNET"/>

Now let's build a simple user interface for our application. This is what our activity_main.xml file looks like.

<?xml version="1.0" encoding="utf-8"?>

<RelativeLayout

xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:orientation="vertical"

android:padding="15dp">

<ImageView

android:id="@+id/ocrImageView"

android:layout_width="match_parent"

android:layout_height="250dp"

android:src="@drawable/loop"

android:layout_centerHorizontal="true"/>

<LinearLayout

android:id="@+id/actionContainer"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_below="@+id/ocrImageView"

android:weightSum="2"

android:orientation="horizontal">

<Button

android:id="@+id/selectImageBtn"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="Select Image"

android:layout_gravity="left"

android:layout_weight="1"/>

<Button

android:id="@+id/processImageBtn"

android:layout_weight="1"

android:layout_gravity="right"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="Recognize Text" />

</LinearLayout>

<EditText

android:id="@+id/ocrResultEt"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:layout_below="@+id/actionContainer"

android:background="@android:color/transparent"

android:gravity="top"

android:inputType="textMultiLine"

android:padding="10dp"

android:hint="Detected text will appear here."/>

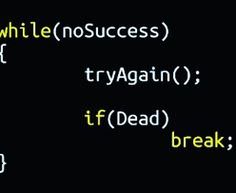

</RelativeLayout>In our xml file above we have two buttons and a single image view, in the Imageview, I have added an image to be loaded by deafault, in which the user can use to extract text, the user can change this image by clicking on the pick Image button, to pick an image from Gallery. Download the image below and save it as loop.jpg.

After that, let's head over to our MainActivity file. Copy and paste the following code into your main activity. We start by declaring the EditText and the ImageView that displays the selected image. Both fields are declared above onCreate() and initialized inside the onCreate() method.

lateinit var ocrImage: ImageView

lateinit var resultEditText: EditText

override fun onCreate(savedInstanceState: Bundle?) {

super.onCreate(savedInstanceState)

setContentView(R.layout.activity_main)

resultEditText = findViewById(R.id.ocrResultEt)

ocrImage = findViewById(R.id.ocrImageView)

//set an onclick listener on the button to trigger the @pickImage() method

selectImageBtn.setOnClickListener{

pickImage()

}

//set an onclick listener on the button to trigger the @processImage method

processImageBtn.setOnClickListener{

processImage(processImageBtn)

}

}

Here we have also referenced our selectImageBtn and processImageBtn buttons and added an onClickListener to each of them to call its respective method. Let's take a look at those methods.

- pickImage() – This method is triggered once the “Select Image” button is clicked. It uses an intent to send the user to the gallery to pick the image from which text should be recognized.

- onActivityResult() – Since clicking the “Select Image” button launches an intent, this method is called automatically when the user finishes picking an image. When the user picks an image, we load it into our ImageView.

- processResultText() – In this method, we loop through all the text blocks recognized by FirebaseVision and display them in our EditText.

- processImage() – This method is triggered by clicking the “Recognize Text” button. It takes the Bitmap shown in the ImageView and passes it to FirebaseVision to start the recognition process. If the process completes successfully, we call our next method, processResultText().

Now let’s explain these steps a little to aid comprehension. To recognize text in a photo, we need to create a FirebaseVisionImage object, which can be built from a media.Image, a ByteBuffer, a Bitmap, or a byte array. In our case we use the bitmap placed on our ImageView.

val bitmap = (ocrImage.drawable as BitmapDrawable).bitmap

val image = FirebaseVisionImage.fromBitmap(bitmap)

After this, we obtain an instance of FirebaseVisionTextRecognizer. For this example we use the on-device model, which looks like this:

val detector = FirebaseVision.getInstance().onDeviceTextRecognizer

To use the cloud-based model, you would instead use this line of code:

val detector = FirebaseVision.getInstance().cloudTextRecognizer

This requires that you enable the Vision API on Google Cloud Platform. Some of the differences between the on-device and cloud-based models that you should be aware of include the following.

| On-Device | Cloud-Based |
| --- | --- |
| Pricing: Free | Pricing: Free for the first 1000 uses of this feature per month (see Pricing). |
| Recognizes sparse text in images. | High-accuracy text recognition. Recognizes sparse text in images. Recognizes densely spaced text in pictures of documents. |
| Recognizes Latin characters. | Recognizes and identifies a broad range of languages and special characters. |
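To make the trade-off in the comparison above concrete, here is a minimal sketch of how an app might decide which model to use. It is plain Kotlin under stated assumptions: `RecognizerMode`, `chooseMode`, and the policy itself are hypothetical and not part of ML Kit, and the 1000-call limit is simply the free tier quoted above.

```kotlin
// Hypothetical policy for picking a recognizer; none of these names
// come from ML Kit.
enum class RecognizerMode { ON_DEVICE, CLOUD }

// Free cloud uses per month, as quoted in the comparison above
const val FREE_CLOUD_CALLS_PER_MONTH = 1000

// Prefer the cloud model only for dense documents, when a network is
// available and the free monthly quota has not been used up.
fun chooseMode(
    hasNetwork: Boolean,
    isDenseDocument: Boolean,
    cloudCallsThisMonth: Int
): RecognizerMode =
    if (hasNetwork && isDenseDocument &&
        cloudCallsThisMonth < FREE_CLOUD_CALLS_PER_MONTH
    ) {
        RecognizerMode.CLOUD
    } else {
        RecognizerMode.ON_DEVICE
    }

fun main() {
    println(chooseMode(hasNetwork = true, isDenseDocument = true, cloudCallsThisMonth = 10))
    println(chooseMode(hasNetwork = false, isDenseDocument = true, cloudCallsThisMonth = 10))
}
```

In the activity, ON_DEVICE would correspond to FirebaseVision.getInstance().onDeviceTextRecognizer and CLOUD to FirebaseVision.getInstance().cloudTextRecognizer.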

After that, we pass the FirebaseVisionImage to the detector's processImage() method and attach success and failure listeners. This is done with the following lines of code:

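if (ocrImage.drawable != null) {

// ... create the bitmap, image and detector as shown above ...

detector.processImage(image)

.addOnSuccessListener { firebaseVisionText ->

// re-enable the button and display the recognized text

v.isEnabled = true

processResultText(firebaseVisionText)

}

.addOnFailureListener {

// re-enable the button and report the failure

v.isEnabled = true

resultEditText.setText("Failed")

}

} else {

Toast.makeText(this, "Select an Image First", Toast.LENGTH_LONG).show()

}

In the last method, processResultText(), we simply loop through the result and render it in our EditText. This is what our complete MainActivity code looks like.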

package com.example.ocr

import android.app.Activity

import android.content.Intent

import android.graphics.drawable.BitmapDrawable

import androidx.appcompat.app.AppCompatActivity

import android.os.Bundle

import android.view.View

import android.widget.EditText

import android.widget.ImageView

import android.widget.Toast

import com.google.firebase.ml.vision.FirebaseVision

import com.google.firebase.ml.vision.common.FirebaseVisionImage

import com.google.firebase.ml.vision.text.FirebaseVisionText

import kotlinx.android.synthetic.main.activity_main.*

class MainActivity : AppCompatActivity() {

lateinit var ocrImage: ImageView

lateinit var resultEditText: EditText

override fun onCreate(savedInstanceState: Bundle?) {

super.onCreate(savedInstanceState)

setContentView(R.layout.activity_main)

resultEditText = findViewById(R.id.ocrResultEt)

ocrImage = findViewById(R.id.ocrImageView)

//set an onclick listener on the button to trigger the @pickImage() method

selectImageBtn.setOnClickListener{

pickImage()

}

//set an onclick listener on the button to trigger the @processImage method

processImageBtn.setOnClickListener{

processImage(processImageBtn)

}

}

fun pickImage() {

val intent = Intent()

intent.type = "image/*"

intent.action = Intent.ACTION_GET_CONTENT

startActivityForResult(Intent.createChooser(intent, "Select Picture"), 1)

}

override fun onActivityResult(requestCode: Int, resultCode: Int, data: Intent?) {

super.onActivityResult(requestCode, resultCode, data)

if (requestCode == 1 && resultCode == Activity.RESULT_OK) {

// data can be null, so avoid a forced unwrap that could crash the app

data?.data?.let { ocrImage.setImageURI(it) }

}

}

fun processImage(v: View) {

if (ocrImage.drawable != null) {

resultEditText.setText("")

v.isEnabled = false

val bitmap = (ocrImage.drawable as BitmapDrawable).bitmap

val image = FirebaseVisionImage.fromBitmap(bitmap)

val detector = FirebaseVision.getInstance().onDeviceTextRecognizer

detector.processImage(image)

.addOnSuccessListener { firebaseVisionText ->

v.isEnabled = true

processResultText(firebaseVisionText)

}

.addOnFailureListener {

v.isEnabled = true

resultEditText.setText("Failed")

}

} else {

Toast.makeText(this, "Select an Image First", Toast.LENGTH_LONG).show()

}

}

private fun processResultText(resultText: FirebaseVisionText) {

if (resultText.textBlocks.size == 0) {

resultEditText.setText("No Text Found")

return

}

for (block in resultText.textBlocks) {

val blockText = block.text

resultEditText.append(blockText + "\n")

}

}

}
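One possible refinement, sketched here under the assumption that you would like to unit-test the formatting without a device, is to move the string building out of processResultText() into a pure function. The name `formatBlocks` is hypothetical and not part of the code above.

```kotlin
// Hypothetical pure function: turns the text of each recognized block
// into the string shown in the EditText.
fun formatBlocks(blockTexts: List<String>): String =
    if (blockTexts.isEmpty()) {
        "No Text Found"
    } else {
        blockTexts.joinToString(separator = "\n")
    }

fun main() {
    println(formatBlocks(listOf("Hello", "World")))
    println(formatBlocks(emptyList()))
}
```

Inside the activity it could be called as resultEditText.setText(formatBlocks(resultText.textBlocks.map { it.text })). Unlike the loop in the listing, this version does not append a trailing newline after the last block.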

Now run your application and see how it works. Please note that immediately after you install the application you will have to wait a little for the on-device model to download; this usually takes less than a minute. You have just succeeded in building an OCR application using Firebase ML Kit for Android.

If you found this post on how to recognize text in images using Firebase ML Kit informative, or have a question, do well to drop a comment below, and don’t forget to share it with your friends.