Build a Gender/Age Prediction Application with React and Clarifai

In this tutorial, I will show you how to build a gender/age prediction application using React and the Clarifai demographics model API.

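Overview of the Gender/Age Prediction Application

The application receives an image link and sends it to the Clarifai demographics model endpoint. For every face detected in the image, the app draws a box around the face and shows its predicted age, gender and multicultural appearance. Each successful prediction also increments the user's rank (the number of images processed).

You can check out the demo and source code if you want to refer to them or take a peek as we get started.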

Technology

- React 16.13.1

- Clarifai

Prerequisites

- Basic knowledge of HTML, CSS, and JavaScript.

- Basic understanding of ES6 features such as:

  - let

  - const

  - Destructuring

  - Arrow functions

  - Classes

  - Import and export

- Basic understanding of how to use npm.

- Sign up for the Clarifai API to get a free API key with 5,000 free operations each month.

Set Up the React Project

Open a terminal in the folder where you want to keep the project and run the command below to install create-react-app globally:

```shell
npm install -g create-react-app
```

Then create the project:

```shell
create-react-app project_name
```

create-react-app generates a React project scaffold with linting and testing already set up. (Alternatively, you can skip the global install and run npx create-react-app project_name.)

Move into the new project folder (cd project_name) and run:

```shell
npm install clarifai
```

This adds the Clarifai npm package, which our app uses to communicate with the Clarifai demographics model API.

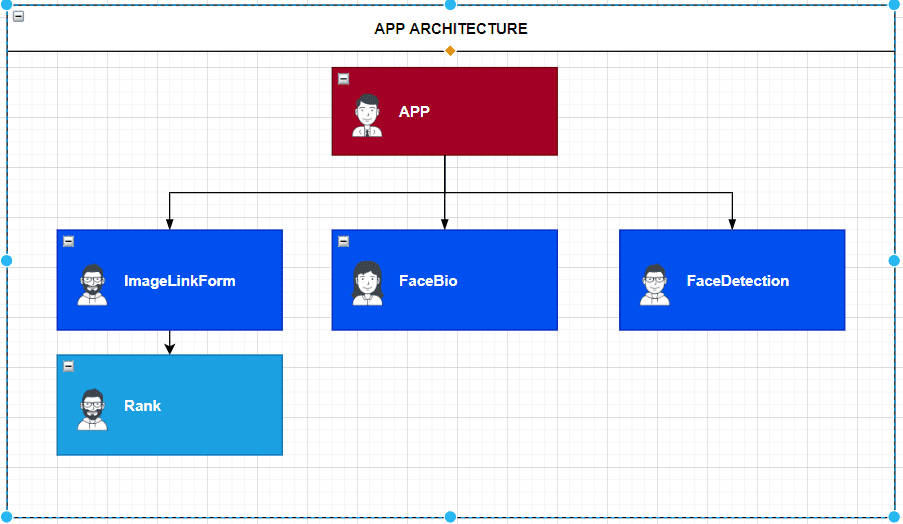

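Gender/Age Prediction Application Architecture

As seen in the diagram above, data flows from parent components down to their child components through props. Props only carry data downward, so a child cannot change its parent's data directly. Instead, the parent passes callback functions down as props and the child calls them to report events; this is how ImageLinkForm tells App about input changes and submissions.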
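The one-way flow can be sketched without React at all. In the plain JavaScript below (an illustration, not how React is implemented), each component is just a function of its props that returns a plain object, and clickDetect is a hypothetical stand-in for the button's onClick. The parent owns the state, passes rank down through ImageLinkForm to Rank, and hands ImageLinkForm a callback. Calling that callback is the only way the child affects the parent, and the new value only reaches the child on the next render; in the real app, setState is what triggers that re-render.

```javascript
// Framework-free illustration of one-way data flow (plain functions, not React).
// Children only read their props; the parent alone owns and changes the state.

function Rank({ rank }) {
  return `#${rank}`;
}

// Passes `rank` further down and exposes the parent's callback as a "click".
function ImageLinkForm({ rank, onImageLinkSubmit }) {
  return { heading: Rank({ rank }), clickDetect: onImageLinkSubmit };
}

// The parent keeps the state and builds fresh props on every render.
function createApp() {
  let state = { rank: 0 };
  const onImageLinkSubmit = () => {
    // Child -> parent: the child calls this, the parent updates its own state.
    state = { ...state, rank: state.rank + 1 };
  };
  return function render() {
    return ImageLinkForm({ rank: state.rank, onImageLinkSubmit });
  };
}

const render = createApp();
const before = render();
before.clickDetect(); // the child reports the event upward...
const after = render(); // ...and the parent re-renders it with new props
console.log(before.heading, after.heading); // prints "#0 #1"
```

Note that before.heading is still "#0" after the click: the props a child received are a snapshot, and only a new render delivers the updated value.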

Building the Gender/Age Prediction Application

Create the React Components for the Gender/Age Prediction Application

In the src folder, create a components folder containing the following subfolders: ImageLinkForm, FaceDetection, FaceBio and Rank.

In the ImageLinkForm folder, create ImageLinkForm.js and ImageLinkForm.css with the code snippets below.

ImageLinkForm.js

```jsx
import React from 'react';
import './ImageLinkForm.css';
import Rank from '../Rank/Rank';

// Form for entering an image URL; it holds no state of its own and
// reports changes and submissions to App through the callback props.
function ImageLinkForm({ rank, onImageLinkChange, onImageLinkSubmit }) {
  return (
    <form className="form">
      <div className="u-margin-bottom-medium">
        <h2 className="heading-primary">
          Hello Deven, your current image count is...
        </h2>
      </div>
      <div className="t-center u-margin-bottom-medium">
        <Rank rank={rank} />
      </div>
      <div className="form__group">
        <input
          type="text"
          className="form__input"
          placeholder="Image url"
          id="name"
          required
          onChange={onImageLinkChange}
        />
        <label htmlFor="name" className="form__label">Image url</label>
      </div>
      <div className="form__group">
        <button className="btn btn--green" onClick={onImageLinkSubmit}>
          Detect→
        </button>
      </div>
    </form>
  );
}

export default ImageLinkForm;
```

As seen in the application architecture, the ImageLinkForm component is the parent of the Rank component. It receives the rank, onImageLinkChange and onImageLinkSubmit props from the App component and passes rank down to Rank. The input's onChange handler calls the onImageLinkChange prop, which runs the onImageLinkChange method in App, while the button's onClick handler calls the onImageLinkSubmit prop, which runs the onImageLinkSubmit method in App. Because the button sits inside a form, clicking it would normally submit the form and reload the page, which is why onImageLinkSubmit in App starts with event.preventDefault().

ImageLinkForm.css

```css
.form__group:not(:last-child) {
  margin-bottom: 2rem;
}

.form__input {
  font-size: 1.5rem;
  font-family: inherit;
  color: inherit;
  padding: 1.5rem 2rem;
  border-radius: 2px;
  background-color: rgba(255, 255, 255, 0.5);
  border: none;
  border-bottom: 3px solid transparent;
  width: 80%;
  display: block;
  transition: all .8s;
}

.form__input:focus {
  outline: none;
  box-shadow: 0 1.5rem 2rem rgba(0, 0, 0, 0.5);
  border-bottom: 3px solid #55c57a;
}

.form__input:focus:invalid {
  border-bottom: 3px solid #ff7730;
}

.form__input::-webkit-input-placeholder {
  color: #999;
}

.form__label {
  font-size: 1.2rem;
  font-weight: 700;
  margin-left: 2rem;
  margin-top: .7rem;
  display: block;
  color: inherit;
  transition: all .3s;
}

/* Hide the label while the placeholder is visible, i.e. while the input is empty */
.form__input:placeholder-shown + .form__label {
  opacity: 0;
  visibility: hidden;
  transform: translateY(-4rem);
}

.btn, .btn:link, .btn:visited {
  text-transform: uppercase;
  display: inline-block;
  text-decoration: none;
  padding: 1.5rem 4rem;
  border-radius: 10rem;
  transition: all 0.1s;
  position: relative;
  font-size: 1.6rem;
  border: none;
  cursor: pointer;
}

.btn:hover {
  transform: translateY(-0.3rem);
  box-shadow: 0 10px 20px rgba(0, 0, 0, 0.2);
}

.btn:hover::after {
  transform: scaleX(1.4) scaleY(1.6);
  opacity: 0;
}

.btn:active, .btn:focus {
  outline: none;
  transform: translateY(-0.1rem);
  box-shadow: 0 0.5rem 1rem rgba(0, 0, 0, 0.2);
}

.btn--white {
  background-color: #fff;
  color: #777;
}

.btn--white::after {
  background-color: #fff;
}

.btn--green {
  background-color: #55c57a;
  color: #fff;
}

.btn--green::after {
  background-color: #55c57a;
}

.btn::after {
  content: '';
  display: inline-block;
  height: 100%;
  width: 100%;
  border-radius: 10rem;
  position: absolute;
  top: 0;
  left: 0;
  z-index: -1;
  transition: all 3s;
}

/* Note: the moveInButton keyframes are not defined in this tutorial */
.btn--animated {
  animation: moveInButton 2s ease-out 0.75s;
  animation-fill-mode: backwards;
}

.btn-text:link, .btn-text:visited {
  font-size: 1.6rem;
  color: #55c57a;
  display: inline-block;
  text-decoration: none;
  border-bottom: 1px solid #55c57a;
  padding: 3px;
  transition: all .2s;
}

.btn-text:hover {
  background-color: #55c57a;
  color: #fff;
  box-shadow: 0 1rem 2rem rgba(0, 0, 0, 0.15);
  transform: translateY(-2px);
}

.btn-text:active {
  box-shadow: 0 0.5rem 1rem rgba(0, 0, 0, 0.15);
  transform: translateY(0);
}
```

In the FaceDetection folder, create FaceDetection.js and FaceDetection.css with the code snippets below.

FaceDetection.js

```jsx
import React from 'react';
import './FaceDetection.css';

// Shows the submitted image and draws one absolutely positioned box per detected face.
function FaceDetection({ imageUrl, faceBoxes }) {
  return (
    <div className="img-detect">
      <img id="image" src={imageUrl} alt="" width="100%" />
      {faceBoxes.map((faceBox, index) => (
        <div
          key={index}
          className="face-box"
          style={{
            top: faceBox.topRow,
            left: faceBox.leftCol,
            right: faceBox.rightCol,
            bottom: faceBox.bottomRow
          }}
        />
      ))}
    </div>
  );
}

export default FaceDetection;
```

The FaceDetection component receives the imageUrl and faceBoxes props from the App component. faceBoxes is an array with one { topRow, leftCol, rightCol, bottomRow } object per face, measured in pixels from the image's edges, and the component draws a box over each face that the Clarifai API detects in the image.
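The pixel values in faceBoxes come from calculateFaceLocation in App.js. Clarifai returns each bounding box as fractions of the image size (top_row, left_col, bottom_row, right_col, each between 0 and 1), while CSS right and bottom on an absolutely positioned element are measured from the right and bottom edges of its positioned container (.img-detect). The sketch below isolates that conversion as a pure function; toFaceBox is a hypothetical helper name, and it takes the rendered width and height as arguments instead of reading them from the DOM, which makes the arithmetic easy to check by hand.

```javascript
// Sketch: convert one Clarifai-style bounding box, given as fractions (0 to 1)
// of the image size, into the pixel offsets used as the .face-box div's
// top/left/right/bottom. Same arithmetic as calculateFaceLocation in App.js.
function toFaceBox(boundingBox, width, height) {
  return {
    leftCol: boundingBox.left_col * width,               // distance from the left edge
    rightCol: width - boundingBox.right_col * width,     // distance from the right edge
    topRow: boundingBox.top_row * height,                // distance from the top edge
    bottomRow: height - boundingBox.bottom_row * height, // distance from the bottom edge
  };
}

// A face covering the middle half of an image rendered at 400 x 300 px.
const box = toFaceBox(
  { top_row: 0.25, left_col: 0.25, bottom_row: 0.75, right_col: 0.75 },
  400,
  300
);
console.log(JSON.stringify(box));
// prints {"leftCol":100,"rightCol":100,"topRow":75,"bottomRow":75}
```

Because React appends px to numeric top, left, right and bottom values in a style object, these numbers can be passed to the style prop directly, as FaceDetection does.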

FaceDetection.css

```css
.img-detect:not(:last-child) {
  margin-bottom: 2rem;
}

/* Positioning context for the absolutely positioned face boxes */
.img-detect {
  position: relative;
}

.face-box {
  position: absolute;
  border: 1px solid #09a1ff;
  box-shadow: 0 0 5px #043b5e inset;
  display: flex;
  flex-wrap: wrap;
  justify-content: center;
  cursor: pointer;
}
```

In the Rank folder, create Rank.js and Rank.css with the code snippets below.

Rank.js

```jsx
import React from 'react';
import './Rank.css';

// Displays how many images the user has submitted so far.
function Rank({ rank }) {
  return <h2 className="heading-secondary">#{rank}</h2>;
}

export default Rank;
```

The Rank component receives the rank prop, which is passed from the App component through the ImageLinkForm component.

Rank.css

```css
.heading-secondary {
  font-size: 2rem;
  text-transform: uppercase;
  font-weight: 700;
  display: inline-block;
  -webkit-background-clip: text;
  letter-spacing: .1rem;
  transition: all .2s;
  background-image: linear-gradient(to right, #7ed56f, #28b485);
  color: transparent;
}

@media only screen and (max-width: 56.25em) {
  .heading-secondary {
    font-size: 2rem;
  }
}

@media only screen and (max-width: 37.5em) {
  .heading-secondary {
    font-size: 0.75rem;
  }
}

.heading-secondary:hover {
  background-color: none;
}
```

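In the FaceBio folder, create FaceBio.js and FaceBio.css with the code snippets below. The FaceBio component receives the facesBio prop from the App component: an array with one { age, gender, appearance } object per detected face, which it renders as a list of boxes.

FaceBio.js

```jsx
import React from 'react';
import './FaceBio.css';

// Lists the predicted age, gender and multicultural appearance of every detected face.
function FaceBio({ facesBio }) {
  return (
    <div className="face-bio">
      {/* Two faces can share the same age, so the array index is used as the key */}
      {facesBio.map((faceBio, index) => (
        <div key={index} className="bio-box">
          <p className="face-bio__text">
            <span className="face-bio__text--primary">Age: </span>{faceBio.age}
          </p>
          <p className="face-bio__text">
            <span className="face-bio__text--primary">Gender: </span>{faceBio.gender}
          </p>
          <p className="face-bio__text">
            <span className="face-bio__text--primary">Multicultural_Appearance: </span>{faceBio.appearance}
          </p>
        </div>
      ))}
    </div>
  );
}

export default FaceBio;
```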

FaceBio.css

```css
.face-bio {
  padding: 1rem;
  background-color: rgba(0, 0, 255, 0.678);
  color: black;
}

.face-bio__text {
  font-size: 1.5rem;
  font-weight: 400;
}

.face-bio__text--primary {
  font-weight: bolder;
}

.bio-box {
  margin-bottom: 1rem;
  padding: 1rem;
  background-color: rgb(0, 0, 255);
}
```

App.js

Replace the contents of src/App.js with the code below.

```jsx
import React, { Component } from 'react';
import Clarifai from 'clarifai';
import FaceBio from './components/FaceBio/FaceBio';
import ImageLinkForm from './components/ImageLinkForm/ImageLinkForm';
import FaceDetection from './components/FaceDetection/FaceDetection';
import './App.css';

// Create a Clarifai client. Replace 'YOUR API KEY' with your own key; note that
// a key embedded in front-end code is visible to anyone who loads the app.
const app = new Clarifai.App({
  apiKey: 'YOUR API KEY'
});

class App extends Component {
  constructor(props) {
    super(props);
    this.state = {
      input: '',    // current text in the image URL field
      imageUrl: '', // URL of the image being analysed
      boxes: [],    // one face box per detected face
      bios: [],     // one { age, gender, appearance } object per detected face
      rank: 0       // number of successfully processed images
    };
  }

  // Keep the URL field's current value in state
  onImageLinkChange = (event) => {
    this.setState({ input: event.target.value });
  };

  // Use the response data from the Clarifai API call to calculate face locations in the image
  calculateFaceLocation = (data) => {
    const image = document.getElementById('image');
    const width = image.width;
    const height = image.height;
    return data.outputs[0].data.regions.map(face => {
      const faceBox = face.region_info.bounding_box;
      return {
        leftCol: faceBox.left_col * width,
        rightCol: width - (faceBox.right_col * width),
        topRow: faceBox.top_row * height,
        bottomRow: height - (faceBox.bottom_row * height)
      };
    });
  };

  // Pull the age, gender and multicultural appearance concepts out of each face region
  faceBioDetection = (data) => {
    return data.outputs[0].data.regions.map(faceRegion => {
      const concepts = faceRegion.data.concepts;
      const genders = concepts.filter(element => element.vocab_id === 'gender_appearance');
      const appearances = concepts.filter(element => element.vocab_id === 'multicultural_appearance');
      const ages = concepts.filter(element => element.vocab_id === 'age_appearance');
      return {
        age: ages[0].name,
        gender: genders[0].name,
        appearance: appearances[0].name
      };
    });
  };

  displayFaceBio = (bios) => {
    this.setState({ bios });
  };

  // Derive the new rank from the previous state instead of a module-level counter
  incrementRank = () => {
    this.setState(prevState => ({ rank: prevState.rank + 1 }));
  };

  displayFaceBox = (faceBoxes) => {
    this.setState({ boxes: faceBoxes });
  };

  // Submit the image URL to the Clarifai demographics model API
  onImageLinkSubmit = (event) => {
    event.preventDefault();
    this.setState({ imageUrl: this.state.input });
    app.models
      .predict(Clarifai.DEMOGRAPHICS_MODEL, this.state.input)
      .then(response => {
        this.displayFaceBox(this.calculateFaceLocation(response));
        this.displayFaceBio(this.faceBioDetection(response));
        this.incrementRank();
      })
      .catch(err => console.log(err));
  };

  render() {
    return (
      <div className="App">
        <section className="section-book">
          <div className="row">
            <div className="col-1-of-4"> </div>
            <div className="col-2-of-4">
              <FaceBio facesBio={this.state.bios} />
            </div>
            <div className="col-1-of-4"> </div>
          </div>
          <div className="row">
            <div className="book">
              <div className="book__form">
                <ImageLinkForm
                  onImageLinkChange={this.onImageLinkChange}
                  onImageLinkSubmit={this.onImageLinkSubmit}
                  rank={this.state.rank}
                />
              </div>
              <div className="detect-image">
                <div>
                  <div className="u-margin-bottom-medium">
                    <h2 className="heading-secondary">
                      Experience the power of AI with Images
                    </h2>
                  </div>
                  <FaceDetection faceBoxes={this.state.boxes} imageUrl={this.state.imageUrl} />
                </div>
              </div>
            </div>
          </div>
        </section>
      </div>
    );
  }
}

export default App;
```

The App component is the root container of our application: it renders the FaceBio, ImageLinkForm and FaceDetection components and holds the application state (the input text, the image URL, the face boxes, the face bios and the rank).
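App's faceBioDetection takes the first concept of each vocabulary (ages[0], genders[0], appearances[0]) and throws if a face has no concept from one of them. The plain JavaScript sketch below is a slightly more defensive variant. It assumes that each concept also carries a numeric value confidence score (the tutorial's code only reads name and vocab_id) and picks the highest-scoring concept per vocabulary, falling back to 'unknown', and it returns an empty list when the response has no regions array. summarizeFaces and topConceptName are hypothetical names, and the mock response uses made-up values shaped like the fields App.js reads, not real API output.

```javascript
// Sketch: summarize each face in a Clarifai-style demographics response.
// Assumption: every concept has `name`, `vocab_id` and a numeric `value` (confidence).
const VOCABS = {
  age: 'age_appearance',
  gender: 'gender_appearance',
  appearance: 'multicultural_appearance',
};

// Name of the highest-scoring concept in one vocabulary, or 'unknown' if none.
function topConceptName(concepts, vocabId) {
  let best = null;
  for (const concept of concepts) {
    if (concept.vocab_id !== vocabId) continue;
    if (best === null || concept.value > best.value) best = concept;
  }
  return best === null ? 'unknown' : best.name;
}

// One { age, gender, appearance } object per detected face region.
function summarizeFaces(response) {
  const regions = response.outputs[0].data.regions || [];
  return regions.map((region) => {
    const concepts = (region.data && region.data.concepts) || [];
    return {
      age: topConceptName(concepts, VOCABS.age),
      gender: topConceptName(concepts, VOCABS.gender),
      appearance: topConceptName(concepts, VOCABS.appearance),
    };
  });
}

// Made-up response with one face and no multicultural_appearance concepts.
const mockResponse = {
  outputs: [{
    data: {
      regions: [{
        data: {
          concepts: [
            { name: 'feminine', vocab_id: 'gender_appearance', value: 0.9 },
            { name: 'masculine', vocab_id: 'gender_appearance', value: 0.1 },
            { name: '31', vocab_id: 'age_appearance', value: 0.2 },
            { name: '28', vocab_id: 'age_appearance', value: 0.4 },
          ],
        },
      }],
    },
  }],
};

console.log(JSON.stringify(summarizeFaces(mockResponse)));
// prints [{"age":"28","gender":"feminine","appearance":"unknown"}]
```

Inside App, a helper like this could replace the body of faceBioDetection; choosing by value rather than by position means the result does not depend on the order in which concepts are returned.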

App.css

Replace the contents of src/App.css with the styles below. The .book rules use ./img/nat-4.jpg as a background image, so add an image with that name in a src/img folder (or remove the url(...) part from those rules); otherwise create-react-app fails to compile because it cannot resolve the file.

```css
*,
*::after,
*::before {
  padding: 0px;
  margin: 0px;
  box-sizing: inherit;
}

/* 1rem = 10px with the browser's default 16px font size */
html {
  font-size: 62.5%;
}

@media only screen and (max-width: 75em) {
  html {
    font-size: 56.25%;
  }
}

@media only screen and (max-width: 56.25em) {
  html {
    font-size: 50%;
  }
}

@media only screen and (min-width: 112.5em) {
  html {
    font-size: 75%;
  }
}

body {
  box-sizing: border-box;
  padding: 3rem;
}

@media only screen and (max-width: 56.25em) {
  body {
    padding: 0;
  }
}

body {
  font-family: "lato", sans-serif;
  font-weight: 400;
  /* font-size: 16px; */
  line-height: 1.7;
  color: #777;
}

.section-book {
  padding: 15rem 0;
  background-image: linear-gradient(to right bottom, #7ed56f, #28b485);
}

@media only screen and (max-width: 56.25em) {
  .section-book {
    padding: 10rem 0;
  }
}

.book {
  background-image: linear-gradient(90deg, rgba(255, 255, 255, 0.9) 0%, rgba(255, 255, 255, 0.9) 50%, transparent 50%), url(./img/nat-4.jpg);
  background-size: cover;
  border-radius: 3px;
  box-shadow: 0 1.5rem 4rem rgba(0, 0, 0, 0.25);
}

.book::after {
  content: "";
  clear: both;
  display: table;
}

@media only screen and (max-width: 75em) {
  .book {
    background-image: linear-gradient(90deg, rgba(255, 255, 255, 0.9) 0%, rgba(255, 255, 255, 0.9) 50%, transparent 50%), url(./img/nat-4.jpg);
    background-size: cover;
  }
}

@media only screen and (max-width: 56.25em) {
  .book {
    background-image: linear-gradient(to right, rgba(255, 255, 255, 0.9) 0%, rgba(255, 255, 255, 0.9) 100%), url(./img/nat-4.jpg);
  }
}

.book__form {
  float: left;
  width: 50%;
  padding: 6rem;
}

@media only screen and (max-width: 75em) {
  .book__form {
    width: 50%;
  }
}

@media only screen and (max-width: 56.25em) {
  .book__form {
    width: 100%;
  }
}

.detect-image {
  float: right;
  width: 50%;
  padding: 6rem;
}

@media only screen and (max-width: 75em) {
  .detect-image {
    width: 50%;
  }
}

@media only screen and (max-width: 56.25em) {
  .detect-image {
    width: 100%;
  }
}

.row {
  max-width: 114rem;
  margin: 0 auto;
}

.row:not(:last-child) {
  margin-bottom: 8rem;
}

@media only screen and (max-width: 56.25em) {
  .row:not(:last-child) {
    margin-bottom: 6rem;
  }
}

.row [class^="col-"] {
  float: left;
}

.row [class^="col-"]:not(:last-child) {
  margin-right: 6rem;
}

@media only screen and (max-width: 56.25em) {
  .row [class^="col-"]:not(:last-child) {
    margin-right: 0;
    margin-bottom: 6rem;
  }
}

@media only screen and (max-width: 56.25em) {
  .row [class^="col-"] {
    width: 100% !important;
  }
}

@media only screen and (max-width: 56.25em) {
  .row {
    max-width: 50rem;
    padding: 0 3rem;
  }
}

.row::after {
  content: "";
  display: table;
  clear: both;
}

.row .col-1-of-2 {
  width: calc((100% - 6rem) / 2);
}

.row .col-1-of-3 {
  width: calc((100% - 2 * 6rem) / 3);
}

.row .col-2-of-3 {
  width: calc((2 * (100% - 2 * 6rem) / 3) + 6rem);
}

.row .col-1-of-4 {
  width: calc((100% - 3 * 6rem) / 4);
}

.row .col-2-of-4 {
  width: calc((2 * (100% - 3 * 6rem) / 4) + 6rem);
}

.row .col-3-of-4 {
  width: calc((3 * (100% - 3 * 6rem) / 4) + 2 * 6rem);
}

.u-margin-bottom-big {
  margin-bottom: 8rem !important;
}

.u-margin-bottom-small {
  margin-bottom: 1.5rem !important;
}

.u-margin-bottom-medium {
  margin-bottom: 4rem !important;
}

@media only screen and (max-width: 56.25em) {
  .u-margin-bottom-medium {
    margin-bottom: 3rem !important;
  }
}

.u-margin-top-big {
  margin-top: 8rem !important;
}

@media only screen and (max-width: 56.25em) {
  .u-margin-top-big {
    margin-top: 5rem !important;
  }
}

.heading-primary {
  font-size: 1.5rem;
  text-transform: uppercase;
  font-weight: 500;
  display: inline-block;
  -webkit-background-clip: text;
  transition: all .2s;
  background-image: linear-gradient(to right, #7ed56f, #28b485);
  color: transparent;
}

@media only screen and (max-width: 56.25em) {
  .heading-secondary {
    font-size: 1.5rem;
  }
}

@media only screen and (max-width: 37.5em) {
  .heading-secondary {
    font-size: 1.5rem;
  }
}

.heading-secondary:hover {
  background-color: none;
}

.t-center {
  text-align: center;
}
```
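The .row and .col-* rules in App.css form a small float-based grid: every column except the last gets a 6rem right margin as its gutter, and a column spanning k of n tracks is k single-track widths plus the k - 1 gutters it covers. The plain JavaScript sketch below reproduces that calc() arithmetic (colWidth is a hypothetical helper, and widths are in rem) to check that the 1-of-4 / 2-of-4 / 1-of-4 row around FaceBio in App.js exactly fills a row at its 114rem max-width. Below 56.25em the media queries switch every column to 100% width, so this only describes the wide layout.

```javascript
// Sketch of the float-grid arithmetic in App.css (all values in rem).
// Every column except the last has a 6rem right margin (the gutter).
const GUTTER = 6;

// Width of a column spanning `span` of `columns` tracks in a row `rowWidth` wide,
// matching e.g. .col-2-of-4: calc((2 * (100% - 3 * 6rem) / 4) + 6rem).
function colWidth(span, columns, rowWidth) {
  const track = (rowWidth - (columns - 1) * GUTTER) / columns; // one track, gutters removed
  return span * track + (span - 1) * GUTTER; // spanned tracks plus the gutters between them
}

// The FaceBio row in App.js: col-1-of-4, col-2-of-4, col-1-of-4.
const rowWidth = 114; // .row max-width
const spans = [1, 2, 1];
const widths = spans.map((span) => colWidth(span, 4, rowWidth));
// Column widths plus the right margins on all but the last column
const total = widths.reduce((sum, w) => sum + w, 0) + (spans.length - 1) * GUTTER;
console.log(widths, total); // prints [ 24, 54, 24 ] 114
```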

Run the Gender/Age Prediction Application

You can run the app with the command:

```shell
npm start
```

If the process succeeds, your browser opens http://localhost:3000/ with the app running.

Conclusion

Today we’ve successfully built a gender/age prediction application with React and the Clarifai demographics model API, and seen how to consume a Clarifai API from a React app. Feel free to add new features to the app, as this is a great way to learn. Happy coding!